Unit Tests

LangSmith unit tests are assertions and expectations designed to quickly identify obvious bugs and regressions in your AI system. Relative to evaluations, tests are designed to be fast and cheap to run, focusing on specific functionality and edge cases. We recommend using LangSmith to track any unit tests that touch an LLM or other non-deterministic part of your AI system.

@unit currently requires version 0.1.42 or later of the langsmith Python SDK. If you are interested in unit testing functionality in TypeScript or other languages, please let us know at support@langchain.dev.

Write a @unit test

To write a LangSmith unit test, decorate your test function with @unit.

If you want to track the full nested trace of the system or component being tested, you can mark those functions with @traceable. For example:

```python
# my_app/main.py
from langsmith import traceable


@traceable  # Optional
def generate_sql(user_query):
    # Replace with your SQL generation logic
    # e.g., my_llm(my_prompt.format(user_query))
    return "SELECT * FROM customers"
```

Then define your unit test:

```python
# tests/test_my_app.py
from langsmith import unit

from my_app.main import generate_sql


@unit
def test_sql_generation_select_all():
    user_query = "Get all users from the customers table"
    sql = generate_sql(user_query)
    assert sql == "SELECT * FROM customers"
```

Run tests

Run the tests with a standard unit testing framework such as pytest. For example:

```bash
pytest tests/
```

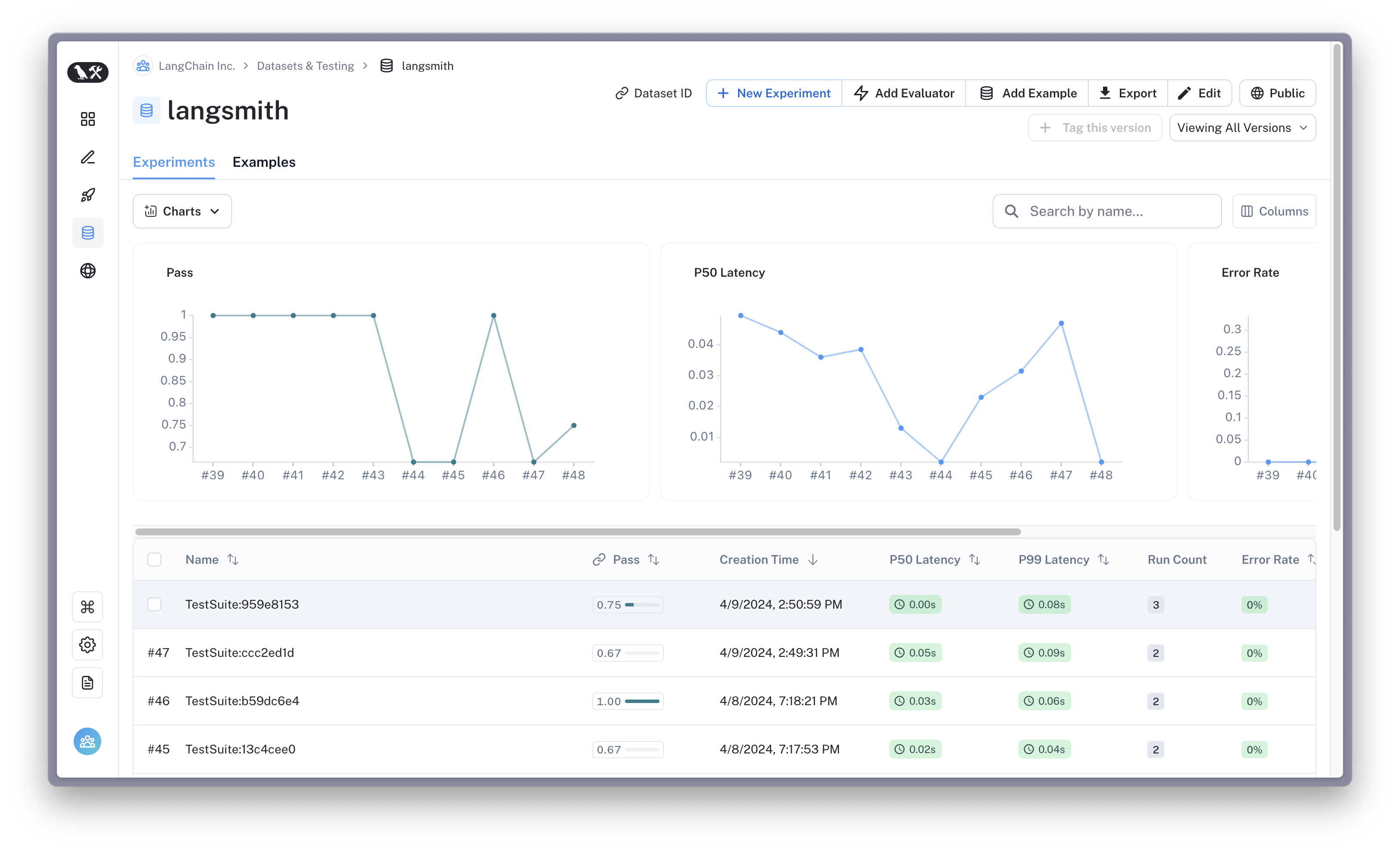

Each time you run this test suite, LangSmith collects the pass/fail results and traces as a new TestSuiteResult. It also logs the pass rate over all applicable tests, where each test scores 1 for a pass and 0 for a failure.
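To make the scoring concrete, here is a minimal, self-contained sketch of the bookkeeping described above. Each test contributes a binary score (1 for pass, 0 for fail), and the pass rate is the mean of those scores. The `track` decorator, the `RESULTS` list, and the `check_*` functions are hypothetical illustrations, not LangSmith's implementation. The real @unit also handles tracing and syncing.

```python
import functools

RESULTS = []  # hypothetical in-memory store of (test name, binary score) pairs


def track(test_fn):
    """Record 1 if the wrapped test passes and 0 if it raises AssertionError."""

    @functools.wraps(test_fn)
    def wrapper(*args, **kwargs):
        try:
            test_fn(*args, **kwargs)
        except AssertionError:
            RESULTS.append((test_fn.__name__, 0))
            raise  # still surface the failure to the test runner
        RESULTS.append((test_fn.__name__, 1))

    return wrapper


def pass_rate(results):
    """Mean of the binary scores, or 0.0 for an empty suite."""
    if not results:
        return 0.0
    return sum(score for _, score in results) / len(results)


@track
def check_select():
    assert "SELECT" in "SELECT * FROM customers"


@track
def check_orders():
    assert "orders" in "SELECT * FROM customers"  # fails on purpose


check_select()
try:
    check_orders()
except AssertionError:
    pass

print(pass_rate(RESULTS))  # 0.5
```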

The test suite syncs to a corresponding dataset named after your package or GitHub repository.

Going Further

@unit is designed to stay out of your way and works well with familiar pytest features. For example:

Defining inputs as fixtures

Pytest fixtures let you define functions that serve as reusable inputs for your tests. LangSmith automatically syncs any test case inputs defined as fixtures. For example:

```python
import pytest
from langsmith import unit

from my_app.main import generate_sql


@pytest.fixture
def user_query():
    return "Get all users from the customers table"


@pytest.fixture
def expected_sql():
    return "SELECT * FROM customers"


# output_keys indicates which test arguments to save as 'outputs' in the dataset (optional).
# Otherwise, all arguments are saved as 'inputs'.
@unit(output_keys=["expected_sql"])
def test_sql_generation_with_fixture(user_query, expected_sql):
    sql = generate_sql(user_query)
    assert sql == expected_sql
```
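One way to picture the output_keys rule is as a split of each test's arguments into two dictionaries. The sketch below uses a hypothetical helper (`split_example` is not part of LangSmith) that mirrors the behavior described in the comments above. Arguments named in `output_keys` become outputs, and everything else becomes inputs.

```python
def split_example(test_args, output_keys=None):
    """Split test arguments into (inputs, outputs) dictionaries.

    Hypothetical helper: mirrors the documented output_keys behavior,
    not LangSmith's actual code.
    """
    output_keys = set(output_keys or [])
    inputs = {k: v for k, v in test_args.items() if k not in output_keys}
    outputs = {k: v for k, v in test_args.items() if k in output_keys}
    return inputs, outputs


args = {
    "user_query": "Get all users from the customers table",
    "expected_sql": "SELECT * FROM customers",
}
inputs, outputs = split_example(args, output_keys=["expected_sql"])
print(inputs)   # {'user_query': 'Get all users from the customers table'}
print(outputs)  # {'expected_sql': 'SELECT * FROM customers'}
```

Without output_keys, every argument lands in inputs and outputs is empty.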

Parametrizing tests

Parametrizing tests lets you run the same assertions across multiple sets of inputs. Use pytest's parametrize decorator to achieve this. For example:

```python
import pytest
from langsmith import unit

from my_app.main import generate_sql


@unit
@pytest.mark.parametrize(
    "user_query, expected_sql",
    [
        ("Get all users from the customers table", "SELECT * FROM customers"),
        ("Get all users from the orders table", "SELECT * FROM orders"),
    ],
)
def test_sql_generation_parametrized(user_query, expected_sql):
    sql = generate_sql(user_query)
    assert sql == expected_sql
```

Note: as the parametrized list grows, consider using evaluate() instead. It parallelizes the evaluation and makes it easier to control individual experiments and the corresponding dataset.
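To show why parallelism matters as the case list grows, the standard-library sketch below runs the same assertion over the two parametrized cases concurrently with `concurrent.futures`. It is not evaluate() and logs nothing to LangSmith. The `generate_sql` here is a hypothetical stand-in that reads the table name from the query instead of calling an LLM.

```python
from concurrent.futures import ThreadPoolExecutor

CASES = [
    ("Get all users from the customers table", "SELECT * FROM customers"),
    ("Get all users from the orders table", "SELECT * FROM orders"),
]


def generate_sql(user_query):
    # Hypothetical stand-in for an LLM call: uses the word before "table".
    words = user_query.split()
    table = words[words.index("table") - 1]
    return f"SELECT * FROM {table}"


def run_case(case):
    """Return 1 if the generated SQL matches the expectation, else 0."""
    user_query, expected_sql = case
    return int(generate_sql(user_query) == expected_sql)


with ThreadPoolExecutor(max_workers=4) as pool:
    # map() preserves input order, so scores line up with CASES.
    scores = list(pool.map(run_case, CASES))

print(scores)  # [1, 1]
```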

Expectations

LangSmith provides an expect utility to help define expectations about your LLM output. For example:

```python
from langsmith import expect, unit

from my_app.main import generate_sql


@unit
def test_sql_generation_select_all():
    user_query = "Get all users from the customers table"
    sql = generate_sql(user_query)
    expect(sql).to_contain("customers")
```

This logs the binary "expectation" score to the experiment results. It also asserts that the expectation is met, so an unmet expectation triggers a test failure.
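The log-then-assert behavior can be sketched with a toy, Jest-style matcher. `ToyExpect`, its `to_contain` method, and the `feedback` list are hypothetical names for illustration only. LangSmith's expect logs the score to the experiment results rather than to a local list.

```python
class ToyExpect:
    """Hypothetical Jest-style matcher that logs a binary score, then asserts."""

    def __init__(self, value, feedback):
        self.value = value
        self.feedback = feedback  # list collecting (key, score) pairs

    def to_contain(self, expected):
        score = int(expected in self.value)
        # Log first, so the score is recorded even if the assertion fails.
        self.feedback.append(("expectation", score))
        assert score == 1, f"Expected {self.value!r} to contain {expected!r}"


feedback = []
ToyExpect("SELECT * FROM customers", feedback).to_contain("customers")

try:
    ToyExpect("SELECT * FROM orders", feedback).to_contain("customers")
except AssertionError as err:
    print(err)  # Expected 'SELECT * FROM orders' to contain 'customers'

print(feedback)  # [('expectation', 1), ('expectation', 0)]
```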

expect also provides "fuzzy match" methods. For example:

```python
import pytest
from langsmith import expect, unit


@unit
@pytest.mark.parametrize(
    "query, expectation",
    [
        ("what's the capital of France?", "Paris"),
    ],
)
def test_embedding_similarity(query, expectation):
    prediction = my_chatbot(query)  # my_chatbot is a placeholder for your application
    expect.embedding_distance(
        # This step logs the distance as feedback for this run
        prediction, expectation
        # Adding a matcher (in this case, 'to_be_*') logs 'expectation' feedback
    ).to_be_less_than(0.5)  # Optional predicate to assert against
    expect.edit_distance(
        # This computes the normalized Damerau-Levenshtein distance between the two strings
        prediction, expectation
        # If no predicate is provided below, 'assert' isn't called, but the score is still logged
    )
```

This test case will be assigned 4 scores:

- The embedding_distance between the prediction and the expectation
- The binary expectation score (1 if the cosine distance is less than 0.5, 0 if not)
- The edit_distance between the prediction and the expectation
- The overall test pass/fail score (binary)
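The first two scores in the list above can be reproduced by hand once you have embedding vectors. The sketch below computes cosine distance (1 minus cosine similarity) and derives the binary expectation score with the same 0.5 threshold. The three-dimensional vectors are made-up placeholders. In practice they come from an embedding model, and this sketch makes no claim about which model LangSmith uses.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Made-up placeholder vectors standing in for real embeddings.
prediction_vec = [0.9, 0.1, 0.0]
expectation_vec = [1.0, 0.0, 0.0]

distance = cosine_distance(prediction_vec, expectation_vec)
expectation_score = int(distance < 0.5)  # 1 if below the threshold, else 0
print(distance, expectation_score)
```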

The expect utility is modeled on Jest's expect API, with some off-the-shelf functionality that makes it easier to grade your LLMs.
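In the example above, the edit_distance score is described as a normalized Damerau-Levenshtein distance. The sketch below implements the restricted variant, optimal string alignment. In this variant, insertions, deletions, substitutions, and adjacent transpositions each cost 1. The unrestricted Damerau-Levenshtein distance can be smaller for some inputs. Dividing by the longer string's length to land in [0, 1] is an assumption here. LangSmith's exact variant and normalization may differ.

```python
def osa_distance(a, b):
    """Optimal string alignment (restricted Damerau-Levenshtein) distance."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete all of a[:i]
    for j in range(cols):
        d[0][j] = j  # insert all of b[:j]
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,  # deletion
                d[i][j - 1] + 1,  # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


def normalized_edit_distance(prediction, expectation):
    """Scale the distance into [0, 1] by the longer string's length (assumed)."""
    longest = max(len(prediction), len(expectation))
    return 0.0 if longest == 0 else osa_distance(prediction, expectation) / longest


print(osa_distance("Paris", "Parsi"))  # 1 (one adjacent transposition)
print(normalized_edit_distance("Paris", "Paris"))  # 0.0
```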

Dry-run mode

If you want to run the tests without syncing the results to LangSmith, you can set LANGCHAIN_TEST_TRACKING=false in your environment.

```bash
LANGCHAIN_TEST_TRACKING=false pytest tests/
```

The tests will run as normal, but the experiment logs will not be sent to LangSmith.
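If you launch the suite from a Python script, such as a CI helper, you can pass the same switch through the child process environment. The `pytest_command` helper below is a hypothetical sketch. It only builds the command and environment. Passing them to `subprocess.run` would actually invoke pytest.

```python
import os


def pytest_command(test_path, *, dry_run=False, base_env=None):
    """Build (argv, env) for running pytest, optionally in dry-run mode.

    Hypothetical helper: it only sets LANGCHAIN_TEST_TRACKING and leaves
    the rest of the environment unchanged.
    """
    env = dict(os.environ if base_env is None else base_env)
    if dry_run:
        env["LANGCHAIN_TEST_TRACKING"] = "false"  # don't sync results to LangSmith
    return ["pytest", test_path], env


# base_env={} keeps the printed output small; omit it in real use so PATH is inherited.
argv, env = pytest_command("tests/", dry_run=True, base_env={})
print(argv, env)  # ['pytest', 'tests/'] {'LANGCHAIN_TEST_TRACKING': 'false'}
# To run it: subprocess.run(argv, env=env, check=True)
```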

Caching

Calling LLMs on every commit in CI can get expensive. To save time and resources, LangSmith lets you cache results to disk. Identical inputs are loaded from the cache, so you don't have to call your LLM provider unless the model, prompt, or retrieved data changes.

To enable caching, run with LANGCHAIN_TEST_CACHE=/my/cache/path. For example:

```bash
LANGCHAIN_TEST_CACHE=tests/cassettes pytest tests/my_llm_tests
```

All requests are cached to tests/cassettes and loaded from there on subsequent runs. If you commit this directory to your repository, your CI can use the cache as well.
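Identical inputs skip the provider call because the cache is looked up by the request itself. The toy disk cache below shows this by keying each response on a hash of the request. This is a conceptual sketch only: `cached_call`, the JSON-file layout, and `fake_llm` are hypothetical. LangSmith manages its cache directory itself, and this sketch does not reproduce its file format.

```python
import hashlib
import json
import os
import tempfile


def cached_call(cache_dir, request, call_fn):
    """Return call_fn(request), reusing a cached response for identical requests."""
    # Identical requests serialize identically, so they map to the same file.
    key = hashlib.sha256(json.dumps(request, sort_keys=True).encode()).hexdigest()
    path = os.path.join(cache_dir, f"{key}.json")
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    response = call_fn(request)
    os.makedirs(cache_dir, exist_ok=True)
    with open(path, "w") as f:
        json.dump(response, f)
    return response


calls = []


def fake_llm(request):
    # Hypothetical stand-in for a provider call; records each real invocation.
    calls.append(request)
    return {"text": "SELECT * FROM customers"}


with tempfile.TemporaryDirectory() as cache_dir:
    request = {"model": "my-model", "prompt": "Get all users from the customers table"}
    first = cached_call(cache_dir, request, fake_llm)
    second = cached_call(cache_dir, request, fake_llm)  # served from disk

print(len(calls), first == second)  # 1 True
```

Changing the model or prompt in the request produces a different key, so that request goes to the provider again.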

Using watch mode

With caching enabled, you can iterate quickly on your tests using watch mode without worrying about unnecessarily hitting your LLM provider. For example, using pytest-watch:

```bash
pip install pytest-watch
LANGCHAIN_TEST_CACHE=tests/cassettes ptw tests/my_llm_tests
```